Folders with high file counts

We field a lot of support requests, and like a doctor's office, we see some extreme cases. One of the more interesting ones is folders with high file counts. Once a folder holds more than a few thousand items, the filesystem becomes noticeably slower at working with that folder. Adding a new file, for example, requires the filesystem to check the new item's name against the existing names in the folder for conflicts, so even a trivial task like that takes progressively longer as the file count increases. Gathering the enormous file list also takes progressively longer as the list grows. The performance hit is even more noticeable on rotational disks and network volumes, so these folders often stand out in backup tasks.

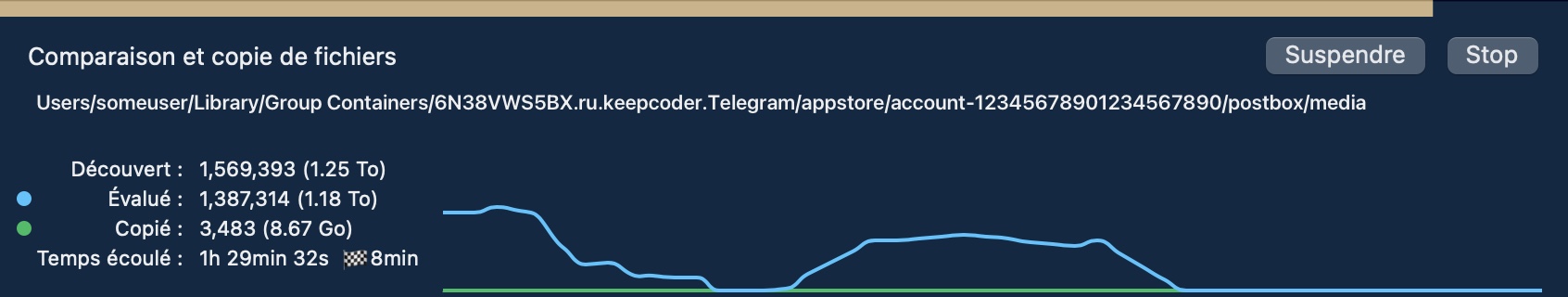

Sometimes high file counts can bring a backup task to a halt

Last week, one of our users encountered the task shown above. On closer analysis, we determined that the "media" folder had 181,274 files in it. In other words, more than 10% of the files on the whole startup disk were in that one "media" folder. In extreme cases like this, retrieving the file list can take so long (more than 10 minutes) that the task aborts with an error such as:

The task was aborted because a subtask did not complete in a reasonable amount of time.

or

The task was aborted because the destination filesystem is not responding.
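One way to picture that timeout behavior is a directory listing that checks a time budget as it goes. This is a minimal sketch, not how the backup task is actually implemented; the function name `list_with_deadline`, the `ListingTimeout` exception, and the 600-second default (chosen to match the 10-minute figure) are all hypothetical.

```python
import os
import time


class ListingTimeout(Exception):
    """Hypothetical error raised when enumerating a folder exceeds its time budget."""


def list_with_deadline(path, timeout_s=600.0, check_every=1000):
    """Collect entry names from `path`, giving up if enumeration runs past `timeout_s`.

    The name, parameters, and 600-second default are illustrative assumptions.
    """
    deadline = time.monotonic() + timeout_s
    names = []
    with os.scandir(path) as it:
        for i, entry in enumerate(it, start=1):
            names.append(entry.name)
            # Only consult the clock every `check_every` entries to keep overhead low.
            if i % check_every == 0 and time.monotonic() > deadline:
                raise ListingTimeout(
                    f"gave up on {path!r} after {i} entries and {timeout_s} s"
                )
    return names
```

One caveat: the clock is only checked between entries, so if a single read from an unresponsive network volume blocks indefinitely, this loop never gets a chance to give up. A real watchdog would need to run the listing on a separate thread or process and abandon it from outside.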

These are typically pretty wild cases that benefit from some human intervention. For example, I recall seeing an AddressBook application support folder that had more than 2.5 million image thumbnails in it. Nobody has that many contacts! That wasn't an isolated incident, either; we've seen that same AddressBook folder implicated at least half a dozen times. Cases like that usually point to a bug in the application that is creating the files: a failure to sanity-check its data store, or to put a sane limit on how many log files it creates. Sometimes, like in the Telegram example above, it's just short-sighted design.

For a contrasting example, consider how Mail organizes a potentially astronomical number of files. If you navigate to the hidden Library folder in your home folder, then to Mail > V10 > {any UUID} > {any mailbox} > {another UUID} > Data, you'll see folders named by number, nested four levels deep, until you finally reach a Messages folder with actual files in it. This nested hierarchy makes it tedious for a human to find a specific email file, but it limits the number of files in any individual folder and helps Mail quickly collect those resources from the filesystem.
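The same idea works for any data store that keys items by number. The sketch below is a hypothetical scheme, not Mail's actual layout: it groups IDs into buckets of `files_per_folder` consecutive values, then spreads the bucket number across `levels` nested folders named by digit, so no folder holds more than `fanout` subfolders or `files_per_folder` files. The function name, parameters, and `.dat` suffix are assumptions for illustration.

```python
import os


def shard_path(root, item_id, files_per_folder=1000, fanout=10, levels=4):
    """Map a non-negative integer ID to a nested path that keeps every folder small.

    Hypothetical layout: IDs are grouped into buckets of `files_per_folder`
    consecutive values, and the bucket number is written out in base `fanout`,
    one digit per folder level.
    """
    if item_id < 0:
        raise ValueError("item_id must be non-negative")
    bucket = item_id // files_per_folder
    parts = []
    for _ in range(levels):
        # The lowest remaining digit picks the folder at the deepest unfilled level.
        parts.append(str(bucket % fanout))
        bucket //= fanout
    if bucket:
        # Leftover digits mean the ID doesn't fit in `levels` folders of `fanout` each.
        raise ValueError("item_id too large for this layout; add more levels")
    # parts were collected from the deepest level upward, so reverse them.
    return os.path.join(root, *reversed(parts), f"{item_id}.dat")
```

With the defaults, `shard_path("Data", 1234567)` returns `Data/1/2/3/4/1234567.dat` (using the platform's path separator), and the layout tops out at 1,000 × 10⁴ = 10 million items before raising `ValueError`. Adding a level multiplies that capacity by `fanout` without making any single folder larger.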

Some data stores with potentially high file counts

Here's a list of some common culprits we've seen over the last couple of years. If you want to look for these on your own Mac, note that you can hold down the Option key and choose Library from the Finder's Go menu to get to that hidden Library folder in your home folder.

- /Users/yourname/Library/Application Support/AddressBook

- /Users/yourname/Library/Application Support/Slack/Cache/Cache_Data

- /Users/yourname/Library/Calendars/Calendar Sync Changes

- /Users/yourname/Library/Group Containers/6N38VWS5BX.ru.keepcoder.Telegram/appstore/account-{youracct#}/postbox/media

- /Library/Application Support/Fitbit Connect/Minidumps

- /Library/FileMaker Server/Data/Backups
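If you'd rather not click through those folders one at a time, a short script can walk a folder tree and report any folder whose direct entry count crosses a threshold. This is a minimal Python sketch; the function names `find_crowded_folders` and `print_report` and the 5,000-entry default threshold (a stand-in for "more than a few thousand") are hypothetical.

```python
import os


def find_crowded_folders(root, threshold=5000):
    """Yield (path, count) for each folder under `root` with more than `threshold` direct entries.

    os.walk ignores folders it cannot read by default, so unreadable folders
    are skipped silently rather than stopping the scan.
    """
    for dirpath, dirnames, filenames in os.walk(root):
        # os.walk has already enumerated this folder, so counting here is cheap.
        count = len(dirnames) + len(filenames)
        if count > threshold:
            yield dirpath, count


def print_report(root, threshold=5000):
    """Print the crowded folders under `root`, largest first."""
    results = sorted(
        find_crowded_folders(root, threshold), key=lambda item: item[1], reverse=True
    )
    for path, count in results:
        print(f"{count:>10,}  {path}")
```

For example, `print_report(os.path.expanduser("~/Library"))` scans the hidden Library folder in your home folder. Some folders there are only readable if the app running the script (such as Terminal) has been granted Full Disk Access; without it, those folders are skipped rather than reported. Scanning a large tree this way can itself take a while, for the same reasons described above.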

Some of these can safely be deleted if you find crazy-high file counts. In some cases, though, you might want to reach out to the software developer to see if they can change how their data is stored to make filesystem access faster. Keep in mind that the performance cost of these high file counts isn't limited to backup tasks; it can also slow down the application itself, so it's often in the developer's interest to make an adjustment.