File Copying Olympics: How File Size Impacts the Race for Performance Gold

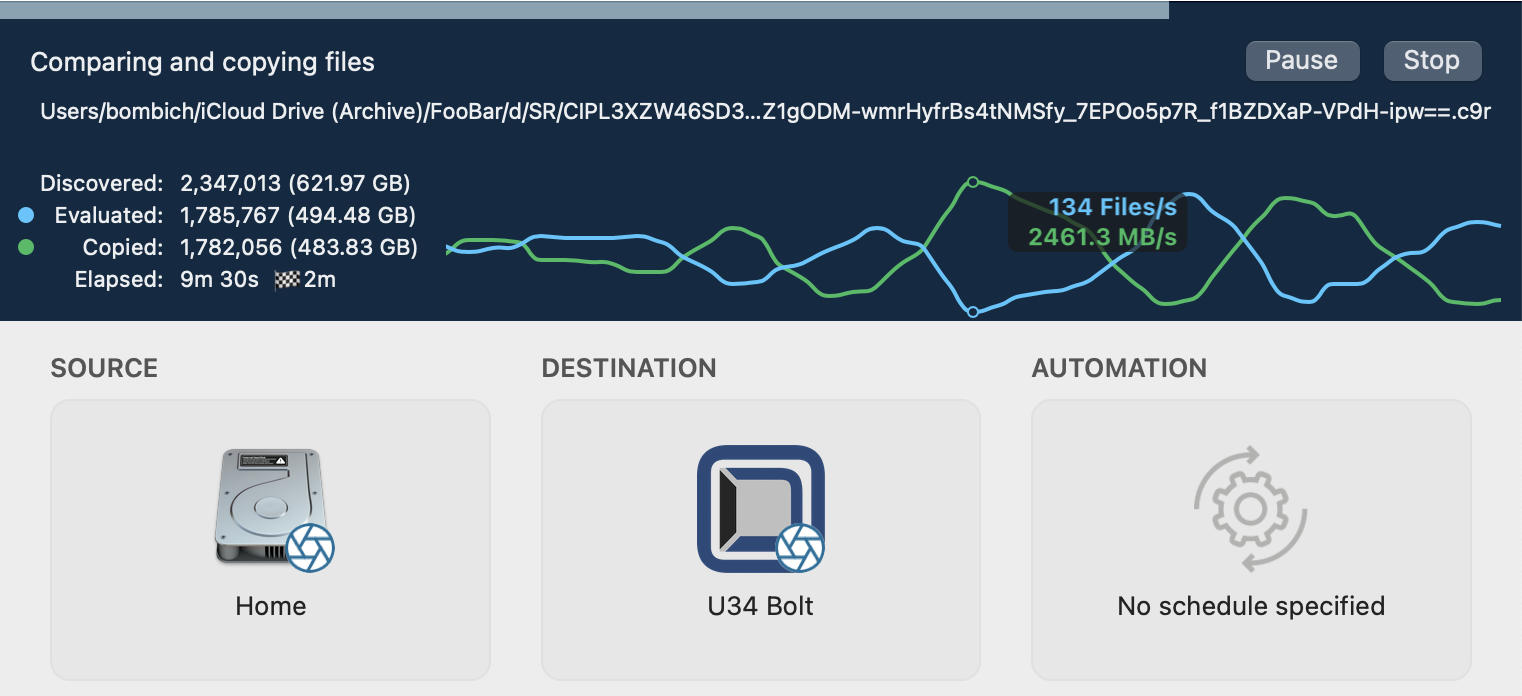

I had the opportunity to evaluate the new U34 Bolt from Oyen, and I was only disappointed that this device doesn't literally scream or burn a hole through the desk. While delivering a stunning 3.1GB/s of sustained throughput, it was completely silent and no warmer than a cup of coffee (120°F/49°C). As exciting as that was, though, I thought this would be a good opportunity to explore why we don't always see peak performance from a storage device.

Please note that we never accept any type of compensation for product recommendations. The only benefit we receive from recommendations is positive experiences with our software.

Interface performance vs. device performance vs. filesystem performance vs. software performance

Perhaps once a month we'll get a comment like, "Blackmagic Disk Speed Test shows XX MB/s, but the backup only gets YY MB/s. What gives?" Less frequently, people wonder how to find the 40Gb/s performance that Thunderbolt boasts. First, let's address the math that may not be obvious. "Gb/s" is not the same as "GB/s"; Gb/s is "gigabits per second", GB/s is "gigabytes per second". There are 8 bits in a byte, so 40Gb/s is comparable to 5GB/s. But interface performance isn't presented as bytes transferred, because the raw data of your files isn't the only thing that has to be passed across the interface. Roughly 1.5% of the communication is used for encoding, which is how 5GB/s becomes 4.923GB/s. 40Gb/s looks better in marketing than 4.923GB/s, so that's what we see in print for 4th generation Thunderbolt devices.
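For anyone who wants to check that arithmetic, here is a minimal Python sketch. The function name and the `payload_fraction` parameter are my own for illustration; the encoding overhead is derived from the 4.923GB/s figure above rather than taken from any Thunderbolt specification.

```python
def usable_gigabytes_per_second(line_rate_gbps: float, payload_fraction: float = 1.0) -> float:
    """Convert a line rate in gigabits/s into gigabytes/s of payload."""
    bits_per_byte = 8
    return line_rate_gbps / bits_per_byte * payload_fraction


# 40 gigabits per second, ignoring any overhead
raw = usable_gigabytes_per_second(40)

# Overhead implied by the article's 4.923GB/s figure (an inference, not a spec value)
implied_overhead = 1 - 4.923 / raw

# Applying that overhead brings us back to the quoted figure
with_overhead = usable_gigabytes_per_second(40, 1 - implied_overhead)

print(f"raw: {raw:.3f} GB/s")
print(f"implied encoding overhead: {implied_overhead:.2%}")
print(f"after overhead: {with_overhead:.3f} GB/s")
```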

That value only demonstrates what the Thunderbolt interface is capable of, though; naturally a rotational disk attached via Thunderbolt can never dream of achieving that throughput. An NVMe-based storage device, however, comes a lot closer to reaching that potential. But even here we see some wide variation where the rubber meets the road.

What weighs more, a ton of feathers or a ton of bricks? OK, sure, we've all heard that one. Which takes longer to copy, 10GB of 1GB files (10 files) or 10GB of 10KB files (1 million files)? This one isn't a trick question: both data sets are 10GB, but the smaller files will take longer to copy, even on blazing-fast storage. Orders of magnitude longer! This is where we start to see filesystem performance and the efficiency of software having an effect on throughput. If you want to take full advantage of the speed of your hardware, you need faster software.
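If you'd like to see the effect on your own storage, the following is a rough Python sketch of that comparison, scaled down so it finishes quickly. It is not the harness used for the tests in this article; the helper names (`make_data_set`, `time_copy`, `compare`) and the sizes are assumptions chosen for illustration, and it uses `shutil.copytree` as a stand-in copier. Results will be skewed by OS caching and by the source and destination sharing a temporary folder, so treat the numbers as a demonstration only.

```python
import os
import shutil
import tempfile
import time
from pathlib import Path


def make_data_set(root: Path, file_count: int, file_size: int) -> None:
    """Create file_count files of file_size bytes each inside root."""
    root.mkdir(parents=True)
    payload = os.urandom(file_size)
    for i in range(file_count):
        (root / f"file_{i:07d}.bin").write_bytes(payload)


def time_copy(source: Path, destination: Path) -> float:
    """Copy a folder with shutil.copytree and return the elapsed seconds."""
    start = time.perf_counter()
    shutil.copytree(source, destination)
    return time.perf_counter() - start


def compare(total_bytes: int, small_file_size: int, large_file_count: int) -> dict:
    """Copy the same amount of data as a few large files and as many small files."""
    layouts = {
        "large": (large_file_count, total_bytes // large_file_count),
        "small": (total_bytes // small_file_size, small_file_size),
    }
    results = {}
    with tempfile.TemporaryDirectory() as tmp:
        for name, (count, size) in layouts.items():
            source = Path(tmp) / f"{name}_src"
            make_data_set(source, count, size)
            seconds = time_copy(source, Path(tmp) / f"{name}_dst")
            results[name] = {"files": count, "bytes": count * size, "seconds": seconds}
    return results


if __name__ == "__main__":
    # 16MB total: 4 files of 4MB each vs. 2,048 files of 8KB each
    for name, result in compare(16 * 1024 * 1024, 8 * 1024, 4).items():
        rate = result["bytes"] / result["seconds"] / 1e6
        print(f"{name}: {result['files']} files, {result['seconds']:.3f}s, {rate:.1f} MB/s")
```

To approximate the real question, raise `total_bytes` and point the temporary folder at the volume you want to test.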

I ran a few different tests that evaluated the U34 Bolt hardware and a collection of popular file copying applications. I won't name the competitors; I'm not doing these evaluations to embarrass anyone, only to demonstrate that not all software will achieve the same performance on the same hardware. I excluded a third competitor that was simply a skin on top of rsync because the max throughput it achieved to the Bolt was just 211MB/s; its 10-15X longer run times blow out the scale of the chart. In all of the tests below, the test system is an Apple Silicon (M1) MacBook Air running macOS Sequoia 15.1 beta 1. The source data sets reside on the internal NVMe storage, the destination is an 8TB Oyen U34 Bolt attached via a Thunderbolt 4 cable. Each performance test was run twice and the result presented (in seconds) is the best of the two.

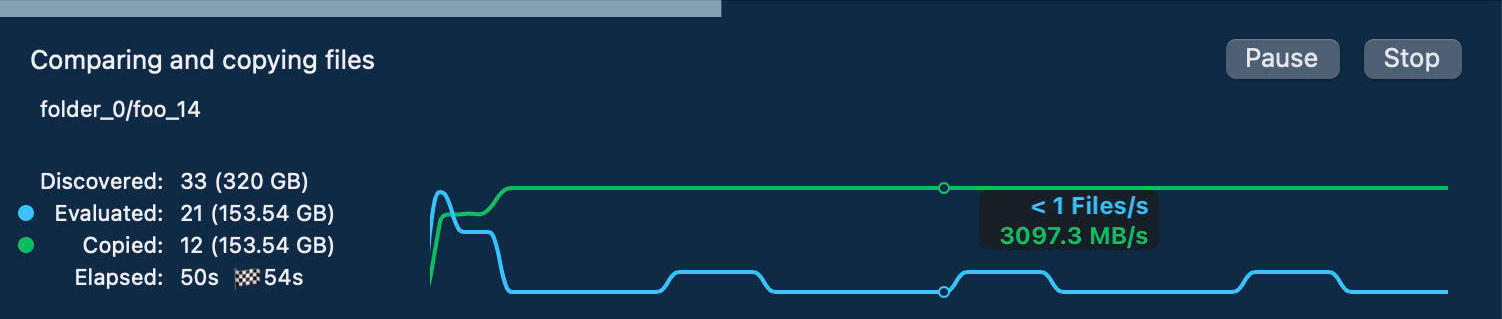

First, I created a test data set of 32x10GB files. This "Large Files" test is intended to demonstrate the peak performance of the hardware, factoring out most of the effects of filesystem performance. CCC was the only copier to sustain >3GB/s on this hardware.

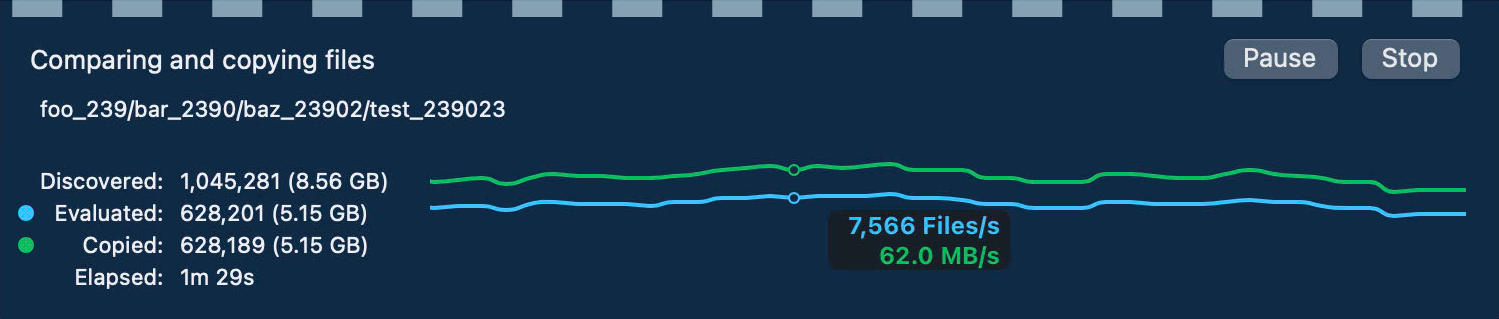

Next I created a "Small Files" data set to accentuate the effects of filesystem performance, and show how efficiently each copier deals with the filesystem transactions required to copy this many files and folders. This data set consists of 1.22 million 8KB files (total of 10GB) distributed in a 3-level-deep hierarchy of folders, 10 files or folders per folder (135,562 total folders). This is about 32x less data than the previous test (10GB vs. 320GB); had I aimed for the same disk usage, these tests would have taken hours.

Lastly, I created a data set that resembles the typical startup disk: a mix of small and large files, but primarily weighted to smaller files. This "Typical" data set has 2 million files, 22K folders, and 108.88GB. Again, this is not an enormous data set; it hardly scratches the surface of the 8TB U34 Bolt. But the startup disk is anemic in my MacBook Air, and the smaller data set is still large enough to see the effects of filesystem and copier performance.

Why do we get so much less throughput when copying smaller files?

Simply put, it's the constant din of interruptions. If Usain Bolt had to pause every meter to pick up a gold medal from the track, there's no way he'd cover 10 meters per second. It's the same with copying files; copying a single file involves a series of filesystem transactions that interrupt the bulk flow of data. Here is an example of transactions you'd need on the destination:

- Open a new file for writing

- Write data, typically in 1-2MB chunks

- Close the file

- Rename the file (if a temporary file was required, e.g. when replacing an existing file)

- Set any extended attributes on the file

- Apply file attributes (e.g. permissions, ownership, modification date, creation date, file flags)
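The list above can be sketched in Python roughly as follows. This is only an illustration of the sequence, not how CCC or any other copier is implemented. The function name `copy_one_file`, the `.tmp` suffix, and the step counter are hypothetical. The counter tallies logical steps, not actual system calls, since Python buffers writes. Ownership, creation date, and file flags are left out because they need elevated privileges or platform-specific calls, and extended attributes are only copied where Python's `os` module exposes them (Linux).

```python
import os
import stat
from pathlib import Path

CHUNK_SIZE = 1024 * 1024  # 1MB, within the 1-2MB range mentioned above


def copy_one_file(source: Path, destination: Path) -> int:
    """Copy one file and return the number of logical destination steps taken."""
    steps = 0
    temp = destination.with_name(destination.name + ".tmp")  # hypothetical temp name

    with open(source, "rb") as src, open(temp, "wb") as dst:
        steps += 1                                  # open a new file for writing
        while chunk := src.read(CHUNK_SIZE):
            dst.write(chunk)                        # write data in chunks
            steps += 1
    steps += 1                                      # close the file

    os.replace(temp, destination)                   # rename the temporary file
    steps += 1

    # Extended attributes: os.listxattr and friends exist only on Linux
    if hasattr(os, "listxattr"):
        try:
            for name in os.listxattr(source):
                os.setxattr(destination, name, os.getxattr(source, name))
                steps += 1
        except OSError:
            pass  # unsupported filesystem or a protected attribute namespace

    # File attributes: permissions and access/modification times only
    info = source.stat()
    os.chmod(destination, stat.S_IMODE(info.st_mode))
    os.utime(destination, ns=(info.st_atime_ns, info.st_mtime_ns))
    steps += 2

    return steps
```

Even in this simplified form, a file that fits in a single chunk still costs at least six steps, and only one of them moves file data.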

Those transactions have a non-negligible cost. For example, simply opening a new file is not instantaneous. Opening a new file creates the filesystem entry, and the filesystem has to verify that the name doesn't conflict with other files in the same folder. Especially when a folder already contains lots of other files and folders, those name comparisons can start to take a long time. Have you ever noticed how long Finder can spend deleting several thousand files from the Trash? It's the same deal: those filesystem transactions aren't "free".

At a smaller scale, e.g. when you're copying just 32 files, the time that it takes to perform those ~200 filesystem transactions is dwarfed by the time it takes to write 320GB of data, so they go unnoticed and we can hit peak throughput. But when those 200 transactions become 7.3 *million* transactions (1.22 million files at roughly six transactions each), we start to see their impact. This is where the file copiers either shine or fizzle; developers have to invest a lot of time and talent into a file copier to get it to harmonize with a filesystem and to take advantage of the performance characteristics of modern storage.
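A back-of-the-envelope model makes the scale difference concrete: copy time is roughly the time to move the bytes plus a fixed cost per transaction. The Python sketch below plugs in the two data sets from this article. The 3.1GB/s rate and the six transactions per file come from the text above; the 50-microsecond cost per transaction is a placeholder I picked purely for illustration, not a measurement from these tests, and real copiers overlap much of this work.

```python
GB = 1000 ** 3  # decimal gigabytes, matching how storage is marketed


def estimated_copy_seconds(
    file_count: int,
    total_bytes: float,
    bytes_per_second: float,
    transactions_per_file: int = 6,
    seconds_per_transaction: float = 50e-6,  # hypothetical placeholder value
) -> tuple[float, float]:
    """Return (seconds moving data, seconds spent on filesystem transactions)."""
    transfer = total_bytes / bytes_per_second
    overhead = file_count * transactions_per_file * seconds_per_transaction
    return transfer, overhead


large_transfer, large_overhead = estimated_copy_seconds(32, 320 * GB, 3.1 * GB)
small_transfer, small_overhead = estimated_copy_seconds(1_220_000, 10 * GB, 3.1 * GB)

for label, transfer, overhead in [
    ("Large Files", large_transfer, large_overhead),
    ("Small Files", small_transfer, small_overhead),
]:
    share = overhead / (transfer + overhead)
    print(f"{label}: {transfer:.1f}s moving data, {overhead:.2f}s in transactions ({share:.1%})")
```

Under these assumptions the transaction cost is a rounding error for the large files and the dominant term for the small ones, which is the pattern described above. Changing the placeholder moves the numbers but not the shape of the result.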

CCC's dynamic performance chart does a nice job of visualizing the performance differences that occur when copying small files vs. large files (in fact, that's specifically why we developed it!). In the example below, we can see the green "throughput" line defiantly mirroring the blue "files/s" line, demonstrating that we just can't have both high throughput and really high files processed per second.

Does the Blackmagic Disk Speed Test provide relevant results?

If you're buying storage for large files, yes. Bear in mind that the Blackmagic Disk Speed Test was created by Blackmagic Design as a way for media content producers to determine if their storage would be suitable for live capture of various video codecs. That specific use case involves sustained writes of very large files without the constant interruption of filesystem transactions. That not only allows the storage to demonstrate its full bandwidth, it also shows whether the storage can maintain that level of performance so that live video capture isn't going to drop frames. If that's the question you're trying to answer, then the speed test results will be very relevant for you, and should also be similar to the performance you'd see if you were to back up large media files from a U34 Bolt to another U34 Bolt.

Should I spend the extra money to have a really fast backup disk?

When you consider the performance of a disk you're about to purchase for backups, don't think of it in terms of how fast your backups will be. Backups typically run in the background, and hopefully you don't notice them at all. Instead, think about how fast (or slow) a restore will be, especially if it is happening under stress. If you work with really large files, the faster backup disk will pay dividends when you need to restore from your backup.

For comparison, I restored some of the files from that "Large Files" data set from the U34 Bolt and from a Samsung T7 (USB-C) back to the MacBook Air's internal storage (I only restored a subset because the internal storage started to deliver glacial write performance when free space dropped below 150GB). Restoring 120GB from the U34 Bolt and the Samsung T7 took 44s and 387s, respectively. At those rates (and on a better Mac), it would take about 6 minutes to restore 1TB of data from the Bolt, but almost an hour to restore it from the Samsung. While the difference in restore speed for the "Typical" data set was not as dramatic, the Bolt was still twice as fast. "Your mileage may vary", but in most cases, restores from the Bolt will be considerably faster.
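The 1TB projections above are a straight extrapolation from the 120GB measurements. As a short Python sketch (the function name is mine, and it assumes the measured rate would hold for the whole restore):

```python
def projected_restore_seconds(data_gb: float, sample_gb: float, sample_seconds: float) -> float:
    """Extrapolate restore time from a measured sample, assuming a constant rate."""
    rate_gb_per_second = sample_gb / sample_seconds
    return data_gb / rate_gb_per_second


bolt_seconds = projected_restore_seconds(1000, 120, 44)   # U34 Bolt: 120GB in 44s
t7_seconds = projected_restore_seconds(1000, 120, 387)    # Samsung T7: 120GB in 387s

print(f"U34 Bolt:   {bolt_seconds / 60:.1f} minutes for 1TB")
print(f"Samsung T7: {t7_seconds / 60:.1f} minutes for 1TB")
print(f"Ratio:      {t7_seconds / bolt_seconds:.1f}x")
```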